Cloud computing 'could give EU 763bn-euro boost'
Widespread adoption of cloud computing could give the top five EU economies a 763bn-euro (£645bn; $1tn) boost over five years, a report has said. The CEBR said it could also create 2.4m jobs. The technology gives software and computing power on demand over the net. But experts warn that cloud computing can be very disruptive to business, and companies could end up "disillusioned". "Nothing kills a new technology better than a poor user experience," said Damian Saunders of Citrix.
The report, by the Centre for Economics and Business Research (CEBR), was commissioned by EMC, a data storage and IT solutions firm that provides cloud computing services. The company is just one of many pushing into the sector, all saying that 2011 will be the year of the cloud, when the technology will find mainstream adoption.
When a company uses cloud computing, it does not build all IT infrastructure by itself. Instead, it rents storage, computing power or software services from other companies. The services are accessed via the internet, which in network diagrams is shown as a cloud, hence the name. The cloud turns information technology into a utility, consumed like electricity or outsourced payroll services, says Chuck Hollis, EMC's chief technology officer.
However, moving IT services to the cloud is more than just a technical upgrade. "Moving to the cloud is a cultural shift as well as a technology shift," warns Dave Coplin, until recently national technology officer at Microsoft UK. "The cloud is a tool, it's an enabler, but you have to think about the outcome: what is it that you are trying to do?"
Image: Cloud computing makes more efficient use of servers
Cloud computing does indeed help companies drive down IT costs; studies by technology analysts such as Nucleus Research have shown that using the cloud sharply cuts the energy used by computing. Cloud computing also makes it easier to use fewer computers to do the same amount of IT work, while the workload itself can be scaled up or down in an instant.
The CEBR report suggests that the rapid uptake of "cloud computing service offerings [will make them] progressively cheaper as economies of scale take hold and service offerings increasingly mature".
The authors of the CEBR study acknowledge that their estimates depend on numerous assumptions and uncertainties, but they forecast that by 2015 the European Union's top five economies - Germany, France, UK, Italy and Spain - could get an annual boost of 177bn euros, and create net new jobs of 466,000 a year.
Biggest winners
The biggest winner in absolute numbers could be Germany, followed by the UK. However, if the gains are measured in relation to the size of the economy, Spain comes out on top while the UK comes bottom as "the only country to show a disproportionately smaller share of the cloud computing benefits than the size of its economy might suggest," the authors of the CEBR report say.
Cloud disruption
While the cloud is "a really cheap place to do business," according to Microsoft's Dave Coplin, it forces companies to change their IT culture and learn that it comes at a price. "People lose full control and flexibility, but get scalability and power in return," he says.
Damian Saunders, in charge of the data centre and cloud group at software company Citrix, says there are four key drivers that are now accelerating the rate of cloud adoption.
For starters, technology has improved, with better connectivity, higher internet speeds and virtualisation technologies that allow the more efficient use of servers. Then there are new business models, with companies not charging a big lump sum per software licence but on an "as-you-consume" basis.
Consumerisation of IT is another driver. Mr Saunders calls it the "IT civil war" whereby every January "employees get a gadget for Christmas and then take it to work and don't understand why they can't use it". The move towards mobile computing, he says, is also driving the move towards cloud computing, which in turn is giving companies a competitive edge.
Arguably the biggest driver is the state of the economy. Cloud computing allows companies to invest in growth while spreading the cost. Instead of big up-front costs, IT investment becomes a continuing operating expenditure that rises and falls with demand.
Until recently, says Mr Saunders, the risks of cloud computing "always overwhelmed the potential reward". This has changed now, he says, but he also warns that "cloud is just reaching the peak of hype" that will soon end in disillusionment for those not prepared for the disruption it brings.
Echoing Mr Coplin's warnings about a cultural shift, Mr Saunders says companies will have to learn that "cloud computing will never replace everything that went on before". Companies will have to work hard to make cloud computing user-friendly, because "nothing kills the successful adoption of new technology better than a poor user experience".
And Sam Jardine of law firm Eversheds warns that cloud computing's "data security is not always as robust or legally compliant as good governance requires, and the cloud relies entirely on the speed and available bandwidth of the internet."
"A robust service level agreement won't compensate you for loss of business if you can't access your applications whilst you experience connectivity issues. We would always advise having a Plan B in place."
If companies get their roll-out of cloud solutions wrong, then all the optimistic forecasts - whether from the CEBR or others - will come to nought.
Today in computer history 1906 - Grace Hopper is born.
Back to the games boys and girls

When the C64 was launched in 1982 it immediately set the standard for 8-bit home computers. Its low cost, superior graphics, high quality sound and a massive 64 KB of RAM positioned it as the winner in the home computer wars, knocking out competitors from the likes of Atari, Texas Instruments, Sinclair, Apple and IBM. Selling over 30 million units and introducing a whole generation to computers and programming, the C64 shook up the video games industry and sparked cultural phenomena such as computer music and the demoscene. In recent years the C64 has enjoyed a spectacular revival, manifesting itself once again as a retrocomputing platform. To allow you to experience and relive the wonders of this unique computer, Cloanto, developers of Commodore/Amiga software since the 1980s and creators of the famous Amiga Forever series, has introduced C64 Forever, a revolutionary preservation, emulation and support package. C64 Forever embodies an intuitive player interface, backed by a built-in database containing more than 5,000 C64 game entries, and advanced support for the new RP2 format, dubbed the "MP3 of retrogaming".
Over the past few years Cloanto have sent me various versions of their product Amiga Forever and asked that I review it on this blog and site. On this issue I am somewhat torn. On one hand I believe that a format like the AMIGA, which was a computing breakthrough and quite possibly one of the most important developments in computing history, should have its BIOS and Kickstart ROMs freely distributed in order to keep it alive. On the other hand Cloanto (who own the rights to the Amiga Kickstart ROM) have produced in AMIGA FOREVER one of the best all-round collections of products, media, emulators and supporting material ever. It was obviously made with great passion and care towards preserving the AMIGA memory. I now have to say that I consider AMIGA FOREVER a must purchase for any and all serious retro gamers and past Amiga owners (like myself).
That said (at long last), I set out to review the C64 FOREVER product. What can I say? It continues their excellent tradition of presenting you, the user, with a clean, concise all-round product for easily emulating the mighty Commodore 64 computer. In closing I just want to say thank you to Cloanto for your support, and thank you for two great products, AMIGA FOREVER and C64 FOREVER.

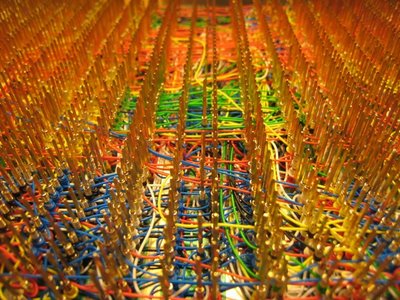

Intel’s fabrication plants can churn out hundreds of thousands of processor chips a day. But what does it take to handcraft a single 8-bit CPU and a computer? Give or take 18 months, about $1,000 and 1,253 pieces of wire. Steve Chamberlin, a Belmont, California, videogame developer by day, set out on a quest to custom design and build his own 8-bit computer. The homebrew CPU would be called Big Mess of Wires or BMOW. Despite its name, it is a painstakingly created work of art. “Computers can seem like complete black boxes. We understand what they do, but not how they do it, really,” says Chamberlin. “When I was finally able to mentally connect the dots all the way from the physics of a transistor up to a functioning computer, it was an incredible thrill.” The 8-bit CPU and computer will be on display doing an interactive chess demo at the fourth annual Maker Faire in San Mateo, California, this weekend, May 30-31. It will be one of 600 exhibits of do-it-yourself technology, hacks, mods and just plain strange hobby projects at the faire, which is expected to draw 80,000 attendees. The BMOW is closest in design to the MOS Technology 6502 processor used in the Apple II, Commodore 64 and early Atari videogame consoles. Chamberlin designed his CPU to have three 8-bit data registers, a 24-bit address size and 12 addressing modes. It took him about a year and a half from design to finish. Almost all the components come from the 1970s- and 1980s-era technology. “Old ’80s vintage parts may not be very powerful, but they’re easy to work with and simple to understand,” he says. “They’re like the Volkswagen Beetles of computer hardware. Nobody argues they’re the best but we love them for their simplicity.” To connect the parts, Chamberlin used wire wrapping instead of soldering. The technique involves taking a hollow, screwdriver-shaped tool and looping the wire through it to create a tight, secure connection. 
Wire wraps are seen as less prone to failures than soldered junctions but can take much longer to accomplish. Still, they offer one big advantage, says Chamberlin. “Wire wrapping is changeable,” he says. “I can unwrap and start over if I make a mistake. It is much harder to recover from a mistake if you solder.”
Chamberlin started with a 12×7-inch Augat wire-wrap board with 2,832 gold wire-wrap posts that he purchased from eBay for $50. Eventually he used 1,253 pieces of wire to create 2,506 individually-wrapped connections, wrapping at the rate of almost 25 wires in an hour. “It’s like a form of meditation,” he wrote on his blog. “Despite how long it takes to wrap, the wire-wrapping hasn’t really impacted my overall rate of progress. Design, debugging, and general procrastination consume the most time.”
The BMOW isn’t just a CPU. Chamberlin added a keyboard input, an LCD output that shows a strip of text, a USB connection, three-voice audio, and VGA video output to turn it into a functioning computer. The video circuitry, a UMC 70C171 color palette chip, was hard to come by, he says. When Chamberlin couldn’t find a source for it online, he went to a local electronics surplus warehouse and dug through a box of 20-year-old video cards. Two cards in there had the chip he needed, so he took one and repurposed it for his project.
The use of retro technology and parts is essential for a home hobbyist, says Chamberlin. Working with newer electronics technology can be difficult because a lot of modern parts are surface-mount chips instead of having through-hole pins. That requires a wave soldering oven, putting them out of reach of non-professionals.
After months of the CPU sitting naked on his desk, Chamberlin fashioned a case using a gutted X Terminal, a workstation popular in the early 1990s. “Why did I do all this?” he says. “I don’t know. But it has been a lot of fun.”
Check out Steve Chamberlin’s log of how BMOW was built.
Photo: Wire wrapped 8-bit CPU/Steve Chamberlin
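The figures quoted in the article can be sanity-checked with a few lines of arithmetic. The sketch below is only an illustration: it assumes every wire is wrapped at both ends (which is consistent with 1,253 wires giving 2,506 connections), treats "almost 25 wires in an hour" as exactly 25, and assumes the 24-bit address selects individual bytes. The variable names are made up for this example and do not come from Chamberlin's design.

```python
# Figures quoted in the article (the names below are illustrative only).
WIRES = 1253
ENDS_PER_WIRE = 2        # assumption: each wire is wrapped at both ends
WIRES_PER_HOUR = 25      # "almost 25 wires in an hour", so real time is a bit longer
ADDRESS_BITS = 24
REGISTER_BITS = 8

# Each wire contributes one wrapped connection per end.
connections = WIRES * ENDS_PER_WIRE

# Lower bound on the hours spent wrapping at the quoted rate.
wrapping_hours = WIRES / WIRES_PER_HOUR

# A 24-bit address can select 2**24 locations (assumed to be bytes here).
address_space_bytes = 2 ** ADDRESS_BITS

# An 8-bit register can hold 2**8 distinct values.
register_values = 2 ** REGISTER_BITS

print(f"Wrapped connections: {connections}")
print(f"Wrapping time: at least {wrapping_hours:.1f} hours")
print(f"Addressable memory: {address_space_bytes // (1024 * 1024)} MiB")
print(f"Values per 8-bit register: {register_values}")
```

On these assumptions the wrapping alone accounts for roughly 50 hours of the 18-month project, which fits Chamberlin's remark that design and debugging took most of the time.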

Check out this cool old computer and console quiz posted on the BBC website this week. I managed to score 9 out of 10 on my first try. I slipped up on the NeoGeo question. Go on and have a go then post your score back here.

http://news.bbc.co.uk/1/hi/magazine/7671677.stm

I kindly received a copy of this book from Winnie to review on the site. Over the years I have received many books on retro gaming and computing but none as concise and well photographed as this. Game.Machines is a beautiful book which analyses and describes some 450 console and micro releases across the globe between 1972 and 2005. The tome's 224 pages contain more than 600 colour pictures (not fuzzy internet jpgs, but professionally photographed shots made exclusively for this book) and tons of info and nostalgia, technical details and background stories. Rafael Dyll, musician on the R64 Volume 2 CD and the Revival ST album, has translated it from the German original -- in close collaboration with author Winnie Forster and Rockstar's David McCarthy. I recommend this book to any real retro gaming/computing fan out there. It is a must-have for your collection. Visit their site for more info and to order.

Computer History 1960 - 1980
Year Event
1960 IBM's 1400 series machines, aimed at the business market, begin to be distributed.
1960 The Common Business-Oriented Language (COBOL) programming language is invented.
1960 Psychologist Frank Rosenblatt creates the Mark I Perceptron, which has an "eye" that can learn to identify its ABCs.
1960 NASA launches TIROS, the first weather satellite, into space.
1960 Bob Bemer introduces the backslash.
1960 Physicist Theodore Maiman creates the first laser on May 16, 1960.
1960 AT&T introduces the dataphone and the first known MODEM.
1960 RS-232 is introduced by EIA.
1960 IFIP is founded.
1960 Digital introduces the PDP-1, the first minicomputer.
1961 Hewlett-Packard stock is accepted by the New York Stock Exchange for national and international trading.
1961 Leonard Kleinrock publishes his first paper, entitled "Information Flow in Large Communication Nets," on May 31, 1961.
1961 General Motors puts the first industrial robot, the 4,000-pound Unimate, to work in a New Jersey factory.
1961 Accredited Standards Committee is founded; this committee later becomes the INCITS.
1961 P.Z. Ingerman develops a thunk.
1961 ECMA is established.
1961 The first transcontinental telegraph line began operation October 24, 1961.
1961 The programming language FORTRAN IV is created.
1962 Steve Russell creates "SpaceWar!" and releases it in February 1962. This game is considered the first game intended for computers.
1962 Philippe Kahn is born March 16, 1962.
1962 Leonard Kleinrock releases his paper talking about packetization.
1962 AT&T places the first commercial communications satellite, Telstar I, into orbit.
1962 Paul Baran suggests transmission of data using fixed size message blocks.
1962 J.C.R. Licklider becomes the first Director of IPTO and gives his vision of a galactic network.
1962 Philips invents the compact audio cassette tape.
1962 The NASA rocket, the Mariner II, is equipped with a Motorola transmitter on its trip to Venus.
1962 Sharp is founded.
1963 IEEE is founded.
1963 The American Standard Code for Information Interchange (ASCII) is developed to standardize data exchange among computers.
1963 Kevin Mitnick is born August 6, 1963.
1963 Bell Telephone introduces the push button telephone November 18, 1963.
1964 Jeff Bezos is born January 12, 1964.
1964 Dartmouth's John Kemeny and Thomas Kurtz develop Beginner's All-purpose Symbolic Instruction Code (BASIC) and run it for the first time May 1, 1964.
1964 Baran publishes reports "On Distributed Communications."
1964 AT&T starts the practice of monitoring telephone calls in the hopes of identifying phreakers.
1964 The TRANSIT system becomes operational on U.S. Polaris submarines. This system later becomes known as GPS.
1964 On April 7, 1964 IBM introduces its System/360, the first of its computers to use interchangeable software and peripheral equipment.
1964 Leonard Kleinrock publishes his first book on packet nets, entitled Communication Nets: Stochastic Message Flow and Design.
1964 The first computerized encyclopedia is invented at the Systems Development Corporation.
1965 Ted Nelson coins the term "hypertext," which refers to text that is not necessarily linear.
1965 Hypermedia is coined by Ted Nelson.
1965 Donald Davies coins the word "Packet."
1965 Engineers at TRW Corporation develop a Generalized Information Retrieval Language and System, which later develops into the Pick Database Management System used today on Unix and Windows systems.
1965 Michael Dell is born February 23, 1965.
1965 Millions watch for the first time a space probe crashing into the moon on March 24, 1965.
1965 Texas Instruments develops transistor-transistor logic (TTL).
1965 Lawrence G. Roberts with MIT performs the first long-distance dial-up connection between a TX-2 computer in Massachusetts and a Q-32 in California.
1965 Gordon Moore makes an observation in an April 19, 1965 paper that later becomes widely known as Moore's Law.
1966 MIT's Joseph Weizenbaum writes a program called Eliza that makes the computer act as a psychotherapist.
1966 Lawrence G. Roberts and Tom Marill publish a paper about their earlier success at connecting over dial-up.
1966 David Filo is born April 20, 1966.
1966 Stephen Gray establishes the first personal computer club, the Amateur Computer Society.
1966 Robert Taylor joins ARPA and brings Larry Roberts there to develop ARPANET.
1966 The programming language BCPL is created.
1967 IBM creates the first floppy disk.
1967 The first CES is held in New York from July 24 to 28, 1967.
1967 Donald Davies creates 1-node NPL packet net.
1967 Wes Clark suggests use of a minicomputer for network packet switch.
1967 The LOGO programming language is developed and is later known for "turtle graphics," a simplified interface useful for teaching children computers.
1967 Ralph Baer creates "Chase", the first video game that was capable of being played on a television.
1967 HES is developed at Brown University.
1967 Nokia is formed.
1967 GPS becomes available for commercial use.
1967 ISACA is established.
1968 Intel Corporation is founded by Robert Noyce and Gordon Moore.
1968 The first Network Working Group (NWG) meeting is held.
1968 Bob Propst invents the office cubicle.
1968 Larry Roberts publishes ARPANET program plan on June 3, 1968.
1968 On June 4, 1968 Dr. Robert Dennard at the IBM T.J. Watson Research Center is granted U.S. patent 3,387,286 describing a one-transistor DRAM cell.
1968 First RFP for a network goes out.
1968 UCLA is selected to be the first node on the Internet as we know it today and serve as the Network Measurement Center.
1968 The movie "2001: A Space Odyssey" is released.
1968 SHRDLU is created.
1968 Seiko markets a miniature printer for use with calculators.
1968 Sony invents Trinitron.
1968 Jerry Yang is born November 6, 1968.
1968 Doug Engelbart publicly demonstrates Hypertext on the NLS on December 9, 1968.
1969 Control Data Corporation, led by Seymour Cray, releases the CDC 7600, considered by most to be the first supercomputer.
1969 AT&T Bell Laboratories develops Unix.
1969 Steve Crocker releases RFC #1 on April 7, 1969, introducing the Host-to-Host and talking about the IMP software.
1969 AMD is founded on May 1, 1969.
1969 Gary Starkweather, while working with Xerox, invents the laser printer.
1969 UCLA puts out a press release introducing the public to the Internet on July 3, 1969.
1969 Ralph Baer files for a US Patent on August 21, 1969 that describes playing games on a television and would later be a part of the Magnavox Odyssey.
1969 On August 29, 1969 the first network switch and the first piece of network equipment (called "IMP", which is short for Interface Message Processor) is sent to UCLA.
1969 The first U.S. bank ATM went into service at 9:00am on September 2, 1969.
1969 On September 2, 1969 the first data moves from UCLA host to the IMP switch.
1969 Charley Kline, a UCLA student, tries to send "login", the first message over ARPANET, at 10:30 p.m. on October 29, 1969. The system transmitted "l" and then "o" but then crashed, making this the first day a message was sent over the Internet and the first network crash.
1969 CompuServe, the first commercial online service, is established.
1969 Linus Torvalds is born December 28, 1969.
1970 Western Digital is founded.
1970 Steve Crocker and UCLA team releases NCP.
1970 Intel announces the 1103, a new DRAM memory chip containing more than 1,000 bits of information. This chip is classified as random-access memory (RAM).
1970 The Xerox Palo Alto Research Center (PARC) is established to perform basic computing and electronic research.
1970 The Forth programming language is created by Charles H. Moore.
1970 U.S. Department of Defense develops Ada, a computer programming language capable of designing missile guidance systems.
1970 The Sealed Lead Acid battery enters commercial use.
1970 Jack Kilby is awarded the National Medal of Science.
1970 Philips introduces the VCR.
1970 Centronics introduces the first dot matrix printer.
1970 Douglas Engelbart gets a patent for the first computer mouse on November 17, 1970.
1970 IBM introduces the System/370, which included the use of Virtual Memory and utilized memory chips instead of magnetic core technology.
1971 The first 8" floppy diskette drive was introduced.
1971 Ray Tomlinson sends the first e-mail, the first messaging system to send messages across a network to other users.
1971 The first laser printer is developed at Xerox PARC.
1971 FTP is first proposed.
1971 IBM introduces its first speech recognition program capable of recognizing about 5,000 words.
1971 Nolan Bushnell and Ted Dabney create the first arcade game called "Computer Space."
1971 SMC is founded.
1971 Steve Wozniak and Bill Fernandez develop a computer called the Cream Soda Computer.
1971 Schadt and Helfrich develop twisted nematic.
1971 Niklaus Wirth invents the Pascal programming language.
1971 Intel introduces the first microprocessor, the 4004, on November 15, 1971.
1971 First edition of Unix released November 3, 1971, along with the first edition of the "Unix PROGRAMMER'S MANUAL [by] K. Thompson [and] D. M. Ritchie." It includes over 60 commands like: b (compile B program); boot (reboot system); cat (concatenate files); chdir (change working directory); chmod (change access mode); chown (change owner); cp (copy file); ls (list directory contents); mv (move or rename file); roff (run off text); wc (get word count); who (who is on the system). The main thing missing was pipes.
1972 Intel introduces the 8008 processor on April 1, 1972.
1972 The first video game console, called the Magnavox Odyssey, is demonstrated May 24, 1972 and later released by Magnavox and sold for $100.00 USD.
1972 ARPA is renamed to DARPA.
1972 The programming language FORTRAN 66 is created.
1972 Dennis Ritchie at Bell Labs invents the C programming language.
1972 Edsger Dijkstra is awarded the ACM Turing Award.
1972 The compact disc is invented in the United States.
1972 Cray Research Inc. is founded.
1972 Atari releases Pong, the first commercial video game, on November 29, 1972.
1972 First public demo of ARPANET.
1972 Whetstone is first released in November 1972.
1972 Norm Abramson's Alohanet is connected to ARPANET: packet radio nets.
1973 Vinton Cerf and Robert Kahn design TCP during 1973 and later publish it with the help of Yogen Dalal and Carl Sunshine in December of 1974 in RFC 675.
1973 ARPA deploys SATNET, the first international connection.
1973 Dr. Martin Cooper makes the first handheld cellular phone call to Dr. Joel S. Engel April 3, 1973.
1973 Robert Metcalfe creates the Ethernet at the Xerox Palo Alto Research Center (PARC) on May 22, 1973.
1973 The first VoIP call is made.
1973 IBM introduces its 3660 Supermarket System, which uses a laser to read grocery prices.
1973 Interactive laser discs make their debut.
1973 The ICCP is founded.
1973 A judge recognizes John Vincent Atanasoff as the inventor of the first electronic digital computer on October 19, 1973.
1974 Intel's improved microprocessor chip, the 8080, is introduced April 1, 1974 and becomes a standard in the computer industry.
1974 The U.S. government starts its antitrust suit against AT&T, which doesn't end until 1982 when AT&T agrees to divest itself of the wholly owned Bell operating companies that provided local exchange service.
1974 John Draper, aka Captain Crunch, discovers that a children's whistle from a breakfast cereal box creates a 2600 hertz tone. Using this whistle and a blue box he's able to successfully get into AT&T's phone network and make free calls anywhere in the world.
1974 The first Toshiba floppy disk drive is introduced.
1974 The IBM MVS operating system is introduced.
1974 A commercial version of ARPANET known as Telenet is introduced and considered by many to be the first Internet Service Provider (ISP).
1974 IBM develops SEQUEL, which today is known as SQL.
1974 IBM introduces SNA.
1974 Charles Simonyi coins the term WYSIWYG.
1974 Altair 8800 kits start going on sale December 19, 1974.
1975 Bill Gates and Paul Allen establish Microsoft April 4, 1975.
1975 Bill Gates, Paul Allen, and Monte Davidoff announce Altair BASIC.
1975 MITS ships one of the first PCs, the Altair 8800, with one kilobyte (KB) of memory. The computer is ordered as a mail-order kit for $397.00.
1975 A flight simulator demo is first shown.
1975 Paul Allen and Bill Gates write the first computer language program for personal computers, which is a form of BASIC designed for the Altair. Gates later drops out of Harvard and founds Microsoft with Allen.
1975 Xerox exits the computer market on July 21, 1975.
1975 The Byte Shop, one of the first computer stores, opens in California.
1975 EPSON enters the US market.
1975 IMS Associates begins shipping its IMSAI 8080 computer kits on December 16, 1975.
1976 On February 3, 1976 David Bunnell publishes an article by Bill Gates complaining about software piracy in his Computer Notes Altair newsletter.
1976 Intel introduces the 8085 processor in March 1976.
1976 Steve Wozniak designs the first Apple, the Apple I computer, in 1976; later Wozniak and Steve Jobs co-found Apple Computer on April Fools' Day.
1976 The first 5.25-inch floppy disk is invented.
1976 Microsoft introduces an improved version of BASIC.
1976 The First Annual World Altair Computer convention and first convention of computer hobbyists is held in New Mexico on March 26, 1976.
1976 The term meme is first defined in the book The Selfish Gene by Richard Dawkins.
1976 The first Public Key Cryptography, known as Diffie-Hellman, is developed by Whitfield Diffie and Martin Hellman.
1976 The Intel 8086 is introduced June 8, 1976.
1976 The NASA Viking 2 lands on Mars September 3, 1976 and transmits pictures and soil analysis.
1976 The original Apple computer company logo of Sir Isaac Newton sitting under an apple tree is replaced by the well known rainbow colored apple with a bite out of it.
1976 Matrox is founded.
1976 DES is approved as a federal standard in November 1976.
1976 Jack Dorsey is born November 19, 1976.
1976 Microsoft officially drops the hyphen in Micro-soft and trademarks the Microsoft name November 26, 1976.
1976 In December of 1976 Bill Gates drops out of Harvard to devote all his time to Microsoft.
1977 Ward Christensen develops a popular modem file transfer protocol called Xmodem.
1977 Apple Computer is incorporated January 4, 1977.
1977 Apple Computer Inc., Radio Shack, and Commodore all introduce mass-market computers.
1977 Kevin Rose is born February 21, 1977.
1977 The First West Coast Computer Faire in San Francisco's Brooks Civic Auditorium is held on April 15, 1977.
1977 Apple Computer's Apple II, the first personal computer with color graphics, is demonstrated.
1977 ARCNET, the first commercial network, is developed.
1977 Zoom Telephonics is founded.
1977 Commodore announces that the PET (Personal Electronic Transactor) will be a self-contained unit, with a CPU, RAM, ROM, keyboard, monitor and tape recorder, all for $495.00.
1977 Microsoft sells the license for BASIC to Radio Shack and Apple and introduces the program in Japan.
1977 Tandy announces it will manufacture the TRS-80, the first mass-produced computer, on August 3, 1977.
1977 BSD is introduced.
1978 Dan Bricklin creates VisiCalc.
1978 The first BBS is put online February 16, 1978.
1978 TCP splits into TCP/IP, driven by Danny Cohen, David Reed, and John Shoch to support real-time traffic. This allows the creation of UDP.
1978 Epson introduces the TX-80, which becomes the first successful dot matrix printer for personal computers.
1978 OSI is developed by ISO.
1978 Roy Trubshaw and Richard Bartle create the first MUD.
1978 The first spam e-mail is sent on May 1, 1978 by Gary Thuerk, an employee at Digital, who was advertising the new DECSYSTEM-2020, 2020T, 2060, and 2060T on ARPANET.
1978 Microsoft introduces a new version of COBOL.
1978 The 5.25-inch floppy disk becomes an industry standard.
1978 In June of 1978 Apple introduces Apple DOS 3.1, the first operating system for the Apple computers.
1978 Ward Christensen and Randy Suess have the first major microcomputer bulletin board up and running in Chicago.
1978 ETA is founded.
1978 John Shoch and Jon Hupp at Xerox PARC develop the first worm.
1979 Robert Williams of Michigan became the first human to be killed by a robot, at the Ford Motor Company on January 25, 1979, resulting in a $10 million lawsuit.
1979 Software Arts Incorporated's VisiCalc becomes the first electronic spreadsheet and business program for PCs.
1979 Epson releases the MX-80, which soon becomes an industry standard for dot matrix printers.
1979 SCO is founded.
1979 Sierra is founded.
1979 The Intel 8088 is released on June 1, 1979.
1979 Bit 3 is founded.
1979 Texas Instruments enters the computer market with the TI 99/4 personal computer that sells for $1,500.
1979 Hayes markets its first modem, which becomes the industry standard for modems.
1979 Atari introduces a coin-operated version of Asteroids.
1979 More than half a million computers are in use in the United States.
1979 3COM is founded by Robert Metcalfe.
1979 Oracle introduces the first commercial version of SQL.
1979 The programming language DoD-1 is officially changed to Ada.
1979 The Motorola 68000 is released and is later chosen as the processor for the Apple Macintosh.
1979 Phoenix is founded.
1979 VMS is introduced.
1979 CompuServe becomes the first commercial online service offering dial-up connection to anyone on September 24, 1979.
1979 Usenet is first started.
1979 Seagate is founded.
1979 Saitek is founded.
1979 Oracle is founded.
1979 Novell Data Systems is established as an operating system developer. Later, in 1983, the company becomes the Novell company.
Today in computer history 2004 - IBM sells its computing division to Lenovo Group for $1.75 billion.
Back to the games boys and girls.

Internet History
Year Event
1960 AT&T introduces the dataphone and the first known MODEM.
1961 Leonard Kleinrock publishes his first paper, entitled "Information Flow in Large Communication Nets," on May 31, 1961.
1962 Leonard Kleinrock releases his paper talking about packetization.
1962 Paul Baran suggests transmission of data using fixed size message blocks.
1962 J.C.R. Licklider becomes the first Director of IPTO and gives his vision of a galactic network.
1964 Baran publishes reports "On Distributed Communications."
1964 Leonard Kleinrock publishes his first book on packet nets, entitled Communication Nets: Stochastic Message Flow and Design.
1965 Lawrence G. Roberts with MIT performs the first long-distance dial-up connection between a TX-2 computer in Massachusetts and Tom Marill with a Q-32 at SDC in California.
1965 Donald Davies coins the word "Packet."
1966 Lawrence G. Roberts and Tom Marill publish a paper about their earlier success at connecting over dial-up.
1966 Robert Taylor joins ARPA and brings Larry Roberts there to develop ARPANET.
1967 Donald Davies creates 1-node NPL packet net.
1967 Wes Clark suggests use of a minicomputer for network packet switch.
1968 Doug Engelbart publicly demonstrates Hypertext on December 9, 1968.
1968 The first Network Working Group (NWG) meeting is held.
1968 Larry Roberts publishes ARPANET program plan on June 3, 1968.
1968 First RFP for a network goes out.
1968 UCLA is selected to be the first node on the Internet as we know it today and serve as the Network Measurement Center.
1969 Steve Crocker releases RFC #1 on April 7, 1969, introducing the Host-to-Host and talking about the IMP software.
1969 UCLA puts out a press release introducing the public to the Internet on July 3, 1969.
1969 On August 29, 1969 the first network switch and the first piece of network equipment (called "IMP", which is short for Interface Message Processor) is sent to UCLA.
1969 On September 2, 1969 the first data moves from UCLA host to the IMP switch.
1969 CompuServe, the first commercial online service, is established.
1970 Steve Crocker and the UCLA team release NCP.
1971 Ray Tomlinson sends the first e-mail, the first messaging system to send messages across a network to other users.
1972 First public demo of ARPANET.
1972 Norm Abramson's Alohanet connected to ARPANET: packet radio nets.
1973 Vinton Cerf and Robert Kahn design TCP during 1973 and later publish it with the help of Yogen Dalal and Carl Sunshine in December of 1974 in RFC 675.
1973 ARPA deploys SATNET, the first international connection.
1973 Robert Metcalfe creates the Ethernet at the Xerox Palo Alto Research Center (PARC).
1973 The first VoIP call is made.
1974 A commercial version of ARPANET known as Telenet is introduced and considered by many to be the first Internet Service Provider (ISP).
1978 TCP splits into TCP/IP driven by Danny Cohen, David Reed, and John Shoch to support real-time traffic. This allows the creation of UDP.
1978 John Shoch and Jon Hupp at Xerox PARC develop the first worm.
1981 BITNET is founded.
1983 ARPANET standardizes TCP/IP.
1984 Paul Mockapetris and Jon Postel introduce DNS.
1986 Eric Thomas develops the first Listserv.
1986 NSFNET is created.
1986 BITNET II is created.
1988 First T-1 backbone is added to ARPANET.
1988 Bitnet and CSNET merge to create CREN.
1990 ARPANET replaced by NSFNET.
1990 The first search engine, Archie, written by Alan Emtage, Bill Heelan, and Mike Parker at McGill University in Montreal, Canada, is released on September 10, 1990.
1991 Tim Berners-Lee introduces WWW to the public on August 6, 1991.
1991 NSF opens the Internet to commercial use.
1992 Internet Society formed.
1992 NSFNET upgraded to T-3 backbone.
1993 The NCSA releases the Mosaic browser.
1994 WXYC (89.3 FM Chapel Hill, NC USA) becomes first traditional radio station to announce broadcasting on the Internet November 7, 1994.
1995 The dot-com boom starts.
1995 The first VoIP software (Vocaltec) is released allowing end users to make voice calls over the Internet.
1996 Telecom Act deregulates data networks.
1996 More e-mail is sent than postal mail in USA.
1996 CREN ended its support and since then the network has ceased to exist.
1997 Internet2 consortium is established.
1997 IEEE releases 802.11 (WiFi) standard.
1998 Internet weblogs begin to appear.
1999 Napster starts sharing files in September of 1999.
1999 On December 1, 1999 the most expensive Internet domain name business.com was sold by Marc Ostrofsky for $7.5 Million. The domain was later sold again, on July 26, 2007, to R.H. Donnelley for $345 Million USD.
2000 The dot-com bubble starts to burst.
2003 January 7, 2003 CREN's members decided to dissolve the organization.

Back to the games boys and girls

Evolution and Selection of File Transfer Protocols
By Chuck Forsberg

Chuck Forsberg's fame comes in part from developing the YMODEM and ZMODEM file transfer protocols. ZMODEM is a file transfer protocol with error checking and crash recovery. ZMODEM does not wait for positive acknowledgment after each block is sent, but rather sends blocks in rapid succession. If a ZMODEM transfer is cancelled or interrupted for any reason, the transfer can be resurrected later and the previously transferred information need not be resent. Chuck Forsberg designed YMODEM as a file transfer protocol for use between modems and developed it as the successor to XMODEM and MODEM7. It was formally given the name "YMODEM" (Yet another Modem implementation) in 1985 by Ward Christensen.

150 years after Sam Morse developed the electrical telegraph, people are still devising new protocols for controlling data communications. Hardly a month passes without a new program claiming to transfer your files faster or better than ever. That sounds perfectly reasonable, since today's personal computer may be twice as fast as last year's screamer. But which protocol or program should you use?

The selection of the best file transfer protocol for your application is important but not obvious. A poor protocol can waste your time, patience and data. In the next few articles I hope to teach you how to choose the file transfer protocols best suited for your needs. I also hope to teach how you can get the best possible results with those protocols.

What should you look for in a file transfer protocol? While not mentioned very often in magazine articles, a protocol's reliability is a paramount concern. What good is it to transfer a file at three times the speed of light if we don't get it right? We'll return to the question of reliability throughout this series because reliability in file transfers is all important.

TRANSFER SPEED
File transfer speed is a hot topic today.
Who wants to waste time and bloat phone bills with slow file transfers? No survey of data communications software is "complete" without some attempt at comparing performance of different programs. Some surveys tabulate simple speed measurements with impressive three dimensional color charts that look to be very meaningful. Unfortunately, tests of file transfers under sanitized laboratory conditions may not have much relevance to your needs. After all, if you only had to move data across a desktop or two, you'd use a 10 megabit LAN or just swap disks. A protocol's speed is profoundly affected by the application and environment it is used in. The original 1970's Ward Christensen file transfer protocol ("XMODEM") was more than 90 per cent efficient with the 300 bit per second modems then popular with microcomputers. XMODEM obliged both the sender and the receiver to keyboard a file name, and only one file could be sent at a time. You could go out and have a cup of coffee while a disk's worth of files were moving over the wire. The first extension to XMODEM was batch transfer. Batch transfer protocols allow many files to be sent with a single command. Even if you only need to send one file, a batch protocol is valuable because it saves you from typing the filenames twice. MODEM7 BATCH (sometimes called BATCH XMODEM) sends the file name ahead of each file transferred with XMODEM. MODEM7 BATCH had a number of shortcomings which provided the mother of invention for alternative XMODEM descendants. MODEM7 BATCH sent file names one character at a time, a slow and error prone process. One such alternative was the batch protocol introduced by Chuck Forsberg's CP/M "YAM" program. YMODEM transmits the file pathname, length, and date in a regular XMODEM block, and transmits the file with XMODEM. Unlike MODEM7 BATCH, the YMODEM pathname block was sent as reliably and quickly as a regular data block. In 1985 Ward Christensen invented the name YMODEM to identify this protocol. 
While we're discussing speed, let's not forget that batch protocols were developed to increase the speed of real life transfer operations, not magazine benchmarks. (What would DOS be without "COPY *.*"?) When studying magazine reviews comparing the speeds of different file transfer protocols, you must add the extra time it takes to type in file names to the listed transfer times for protocols that make you keyboard this information twice.

By 1985 personal computer owners were upgrading their 300 bit per second (bps) modems to 1200 and 2400 bps units. But while a 2400 bps modem transmits bits eight times faster than a 300 bps modem, 2400 bps XMODEM transfers are nowhere near eight times as fast as 300 bps XMODEM transfers. This was a keen disappointment to owners of these new $1000 modems.

What causes this disappointing XMODEM file transfer performance? The culprit is transit time, the same phenomenon that makes satellite telephone conversations so frustrating. XMODEM, YMODEM, Jmodem and related protocols stop at the end of each block of data to wait for an acknowledgement that the receiver has correctly received the data. Even if the sending and receiving programs processed the data and acknowledgements in zero time (some come close), XMODEM cannot do anything useful while the receiver's acknowledgement is making its way back to the sender. The length of this delay has increased tremendously in the last decade. The 300 bps modems prevalent in XMODEM's infancy did not introduce significant delays. Today's high speed modems and networks can introduce delays that more than double the time to send an XMODEM data block.

An easy performance enhancement was to increase the XMODEM/YMODEM data block length from 128 bytes to 1024. This reduced protocol overhead by 87 percent, not bad for a few dozen lines of code. Other protocols such as Jmodem and Long Packet Kermit allow even longer data blocks.
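The effect of transit time on a stop-and-wait protocol can be sketched with a little arithmetic. What follows is a rough model, not a measurement: it assumes 10 bits on the wire per character, 4 bytes of framing per block, and a made-up half-second round trip for the acknowledgement. The function names are illustrative only.

```python
BITS_PER_CHAR = 10  # assumption: 8 data bits plus one start and one stop bit


def efficiency(block_bytes, overhead_bytes, bps, round_trip_s):
    """Fraction of elapsed time spent sending payload when the sender
    stops after every block and waits for the acknowledgement."""
    chars_per_s = bps / BITS_PER_CHAR
    payload_s = block_bytes / chars_per_s
    block_s = (block_bytes + overhead_bytes) / chars_per_s
    return payload_s / (block_s + round_trip_s)


def overhead_reduction(old_block, new_block):
    """Relative drop in per-block costs (framing plus waiting) per byte
    of data when the block length grows from old_block to new_block."""
    return 1 - old_block / new_block


if __name__ == "__main__":
    delay = 0.5  # hypothetical round-trip delay in seconds
    for bps in (300, 1200, 2400):
        print(f"{bps:5d} bps, 128-byte blocks: {efficiency(128, 4, bps, delay):.0%}")
    print(f" 2400 bps, 1024-byte blocks: {efficiency(1024, 4, 2400, delay):.0%}")
    print(f"per-block cost saved, 128 to 1024: {overhead_reduction(128, 1024):.1%}")
```

With a fixed delay, the same wait that is barely noticeable at 300 bps becomes a large share of each block time at 2400 bps. Going from 128 to 1024 byte blocks pays the per-block cost one eighth as often, which is where the figure of about 87 percent comes from.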
Long packet protocols gave good results under ideal conditions, but speed and reliability fell apart when conditions were less than ideal. COMPATIBILITY Programs with non-standard "YMODEM" have plagued YMODEM users since the early days of the protocol. Some programmers simply refused to abide by Ward Christensen's definition of YMODEM as stated in his 1985 message introducing the term. Today more and more programs are being brought into compliance with the YMODEM standard, but as of this writing some widely marketed programs including CROSSTALK still don't meet the standard. Even when both the sending and receiving programs agree on the protocol, XMODEM and YMODEM do not always work in a given application. Some applications depend on wide area networks which use control characters to control their operation. Some mainframe computers have similar restrictions on the transmission and reception of control characters. (ASCII control characters are reserved for controlling devices and networks instead of displaying printing characters.) Since XMODEM and YMODEM use all possible 256 character codes for transferring data and control information, some of XMODEM's data appears as control characters and will be "eaten" by the network. This confusion sinks XMODEM, possibly taking your phone call or even a terminal port down with it. The Kermit protocol was developed at Columbia University to allow file transfers in computer environments hostile to XMODEM. This feature makes Kermit an essential part of any general purpose communications program. Kermit avoids network sensitive control characters with a technique called control character quoting. If a control character appears in the data, Kermit sends a special printing character that indicates the printing character following should be translated to its control character equivalent. In this way, a Control-P character (which controls many networks) may be sent as "#P". 
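Control character quoting can be sketched in a few lines. This is a minimal illustration rather than a Kermit implementation: it assumes the usual "#" prefix and the toggle-bit-6 mapping that turns Control-P into "P", it treats the prefix character itself as needing a prefix, and it leaves packet framing and checksums out entirely. The function names are hypothetical.

```python
QUOTE = ord("#")  # assumed control-quote prefix character


def is_control(b):
    """True for ASCII control characters (0-31) and DEL (127)."""
    return b < 0x20 or b == 0x7F


def quote(data: bytes) -> bytes:
    """Replace each control character with '#' plus a printable stand-in."""
    out = bytearray()
    for b in data:
        if is_control(b):
            out += bytes([QUOTE, b ^ 0x40])  # Control-P (0x10) becomes "#P"
        elif b == QUOTE:
            out += bytes([QUOTE, QUOTE])     # a literal '#' is sent as "##"
        else:
            out.append(b)
    return bytes(out)


def unquote(data: bytes) -> bytes:
    """Reverse quote(): undo the stand-in after each '#' prefix."""
    out = bytearray()
    it = iter(data)
    for b in it:
        if b != QUOTE:
            out.append(b)
            continue
        nxt = next(it, None)
        if nxt is None:
            raise ValueError("dangling quote character at end of data")
        restored = nxt ^ 0x40
        # Only map back when the result really is a control character;
        # otherwise the prefixed character is taken literally.
        out.append(restored if is_control(restored) else nxt)
    return bytes(out)


if __name__ == "__main__":
    sample = b"A\x10B#C\r\n"
    print(quote(sample))
    print(unquote(quote(sample)) == sample)
```

Every quoted character costs two bytes on the wire, so the overhead depends on how many control characters the data contains.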
Likewise, Kermit can transmit characters with the parity bit (8th bit) set as "&c" where "c" is the corresponding character without the 8th bit. When sending ARC and ZIP files, this character translation in combination with character quoting adds considerable overhead with a corresponding decrease in the speed of data transfer. The original and most popular form of Kermit uses packets that are even shorter than XMODEM's 128 byte blocks. Kermit was designed to work with computers that choked on input that doesn't look very much like regular text, so limiting packet length to one line of text was quite logical. Kermit Sliding Windows ("SuperKermit") improved throughput over networks at the cost of increased complexity. As with regular Kermit, SuperKermit sends an ACK packet for each 96 byte data packet. Unlike regular Kermit, the SuperKermit sender does not wait for each packet to be acknowledged. Instead, SuperKermit programs maintain a set of buffers that allow the sender to "get ahead" of the receiver by a specified amount (window size) up to several thousand bytes. By sending ahead, SuperKermit can transmit data continuously, largely eliminating the transmission delays that slow XMODEM and YMODEM. For a number of technical reasons, SuperKermit has not been widely accepted. Those computers capable of running SuperKermit usually support the simpler YMODEM-1k or more efficient ZMODEM protocols. However, the lessons I learned from YMODEM's and SuperKermit's development were to serve me later in the design of ZMODEM. Recent changes in the Kermit protocol have allowed knowledgeable users to remove much of Kermit's overhead in many environments. At the same time, Columbia University placed restrictive Copyright restrictions on their Kermit code, and few developers have the expertise to upgrade the older "The Source" SuperKermit code to the current protocol. 
The computing resources needed to support higher speed Kermit transfers have also deterred Kermit's widespread deployment. Currently, Omen Technology's Professional-YAM and ZCOMM are the only programs not marketed by Columbia University that include the recent Kermit performance enhancements. ZMODEM In early 1986, Telenet funded a project to develop an improved public domain application to application file transfer protocol. This protocol would alleviate the throughput problems their network customers were experiencing with XMODEM and Kermit file transfers. As I started work on what was to become ZMODEM, I hoped a few simple modifications to XMODEM technology could provide high performance and reliability over packet switched networks while preserving XMODEM's simplicity. YMODEM and YMODEM-1k were popular because programmers inexperienced in protocol design could, more or less, support the newer protocols without major effort, and I wanted the new protocol to be popular. The initial concept added block numbers to XMODEM's ACK and NAK characters. The resultant protocol would allow the sender to send more than one block before waiting for a response. But how should the new protocol add a block number to XMODEM's ACK and NAK? The WXMODEM, SEAlink, and Megalink protocols use binary bytes to indicate the block number. After careful consideration, I decided raw binary was unsuitable for ZMODEM because binary codes do not pass backwards through some modems, networks and operating systems. But there were other problems with the streaming ACK technique used by SuperKermit, SEAlink, and Unix's UUCP-g protocols. Even if the receiver's acknowledgements were sent as printing digits, some operating systems could not recognize ACK packets coming back from the receiver without first halting transmission to wait for a response. There had to be a better way. Another problem that had to be addressed was how to manage "the window". 
(The window is the data in transit between sender and receiver at any given time.) Experience gained in debugging The Source's SuperKermit protocol indicated a window size of about 1000 characters is needed to fully drive Telenet's network at 1200 bps. A larger window is needed in higher speed applications. Some high speed modems require a window of 20000 or more characters to achieve full throughput. Much of the SuperKermit's inefficiency, complexity, and debugging time centered around its ring buffering and window management logic. Again, there had to be a better way. A sore point with XMODEM and its progeny is error recovery. More to the point, how can the receiver determine whether the sender has responded, or is ready to respond, to a retransmission request? XMODEM attacks the problem by throwing away characters until a certain period of silence has elapsed. Too short a time allows a spurious pause in output (network or timesharing congestion) to masquerade as error recovery. Too long a timeout devastates throughput, and allows a noisy line to "lock up" the protocol. Kermit and ZMODEM solve this problem with a distinct start of packet. A further error recovery problem arises in streaming protocols. How does the receiver know when (or if) the sender has recognized its error signal? Is the next packet the correct response to the error signal? Is it something left over "in the queue" from before the sender received the error signal? Or is this new subpacket one of many that will have to be discarded because the sender did not receive the error signal? How long should this continue before sending another error signal? How can the protocol prevent this from degenerating into an argument about mixed signals? For ZMODEM, I decided to forgo the complexity of SuperKermit's packet assembly scheme and its associated buffer management logic and memory requirements. ZMODEM uses the actual file position in its headers instead of block numbers. 
While a few more bytes are required to represent the file position than a block number, the protocol logic is much simpler. Unlike XMODEM, YMODEM, SEAlink, Kermit, etc., ZMODEM cannot get "out of sync" because the range of synchronization is the entire file length. ZMODEM is not a single protocol in the sense of XMODEM or YMODEM. Rather, ZMODEM is an extensible language for implementing protocols. The next article in this series will show how ZMODEM can be adapted for vastly different environments. ZMODEM normally sends data non-stop and the receiver is silent unless an error is detected. When required by the sender's operating system or network, the sending program can specify a break signal or other interrupt sequence for the receiver to use when requesting error correction. To simplify logic and minimize memory consumption, most ZMODEM programs return to the point of error when retransmitting garbled data. While not as elegant as SuperKermit's selective retransmission logic, ZMODEM avoids the considerable overhead required to support selective retransmission. My experience with SuperKermit's selective retransmission indicates ZMODEM does not suffer from this lack of selective retransmission. If selective retransmission is required, ZMODEM is extensible enough to support it. Yet another sore point with XMODEM is the garbage XMODEM adds to files. This was acceptable in the days of CP/M, when files had no exact length. It is not desirable with contemporary systems such as DOS and Unix. Full YMODEM uses the file length information transmitted in the header block to trim garbage from the output file, but this causes data loss when transferring files that grow during a transfer. In some cases, the file length may be unknown, as when data is obtained from a "pipe". ZMODEM's variable length data subpackets solve both of these problems. Since ZMODEM has to be "network friendly", certain control characters are escaped.
Six network control characters (DLE, XON and XOFF in both parities) and the ZMODEM flag character (Ctrl-X) are replaced with two character sequences when they appear in raw data. This network protection exacts a speed penalty of about three percent when sending compressed files (ARC, ZOO, ZIP, etc.). Most, but not all, users feel this is a small penalty to pay for a protocol that works properly in many network applications where XMODEM, YMODEM, SEAlink, et al. fail. Since some characters had to be escaped anyway, there wasn't much point wasting bytes to fill out to a fixed packet length. In ZMODEM, the length of data subpackets is denoted by ending each subpacket with an escape sequence similar to BISYNC and HDLC. The end result was a ZMODEM header containing a "frame type", supervisory information, and a CRC protecting the header's information. Data frames consist of a header followed by 1 or more data subpackets. (A data subpacket consists of 0 to 1024 bytes of data followed by a 16 or 32 bit CRC.) In the absence of transmission errors, an entire file can be sent in one data frame. The ZMODEM "block length" can be as long as the entire file, yet error correction can begin within 1024 bytes of the garbled data. Unlike XMODEM et al., "Block Length" issues do not apply to ZMODEM. People who talk about "ZMODEM block length" in the same breath as XMODEM/YMODEM block length simply do not understand ZMODEM. Since the sending system may be sensitive to numerous control characters or strip parity in the reverse data path, all of the headers sent by the ZMODEM receiver are in hex. With equivalent binary (efficient) and hex (application friendly) frames, the sending program can send an "invitation to receive" sequence to activate the receiver without crashing the remote application with many unexpected control characters. Going "back to scratch" in the protocol design allowed me to steal many good ideas from the existing protocols while adding a minimum of brand new ideas.
(It's usually the new ideas that don't work right at first.) From Kermit and Unix's UUCP came the concept of an initial dialog to exchange system parameters. Kermit inspired ZMODEM headers. The concatenation of headers and an arbitrary number of data subpackets into a frame of unlimited length was inspired by the ETB character in IBM's BISYNC. ZMODEM generalized CompuServe Protocol's concept of host controlled transfers to provide ZMODEM AutoDownload(TM). An available Security Challenge prevents password hackers from abusing ZMODEM's power. With ZMODEM automatic downloads, the host program can automate the complete file transfer process without requiring any action on the user's part. We were also keen to the pain and $uffering of legions of telecommunicators whose file transfers have been ruined by communications and timesharing system crashes. ZMODEM Crash Recovery is a natural result of ZMODEM's use of the actual file position instead of protocol block numbers. Instead of getting angry at the cat when it knocks the phone off the hook, you can dial back and use ZMODEM Crash Recovery to continue the download without wasting time or money. Recent compatible proprietary extensions to ZMODEM extend Crash Recovery technology to support file updates by comparing file contents and transferring new data only as necessary.

RELIABILITY
For many years the quality of phone lines at Omen's Sauvie Island location has exposed the weaknesses of many traditional and proprietary file transfer protocols. Necessity being the Mother of Invention, I have over the years added "secret tweaks" to my XMODEM and YMODEM protocol handlers to compensate for XMODEM's marginal reliability at higher speeds. Kermit is easier to make reliable because Kermit does not depend on single character control sequences (ACK/NAK/EOT) used by XMODEM, YMODEM, SEAlink, etc. But, as we have seen, Kermit is too slow in many applications.
One advantage of starting ZMODEM's design "from scratch" was the opportunity to avoid the mistakes that plague the old protocols. (I could always make new ones.) Two ideas express the sine qua non of reliable protocol design. The truism: "Modem speak with forked tongue" bites in many ways. While no protocol is error-proof, a protocol is only as reliable as its weakest link, except when tested on a table top. ZMODEM obeys this precept by protecting every data and command with a 16 or 32 bit CRC. Another protocol design commandment is: "Don't burn your bridges until you are absolutely positively certain you really have crossed them." ZMODEM's use of the actual file position instead of block numbers helps, but the program logic must be carefully designed to prevent race and deadlock conditions. The basic ZMODEM technology was introduced into the public domain in 1986. Many perceptive programmers and theoreticians have examined and implemented ZMODEM in their programs. They have exposed weaknesses in ZMODEM which go uncorrected in undocumented proprietary protocols. ZMODEM today is a stable, reliable protocol supported by communications programs from dozens of major authors. post your ping,upload,download speed, back in the day   Back to the games boys and girls

Is the Web heading toward redirect hell?

Google is doing it. Facebook is doing it. Yahoo is doing it. Microsoft is doing it. And soon Twitter will be doing it. We’re talking about the apparent need of every web service out there to add intermediate steps to sample what we click on before they send us on to our real destination. This has been going on for a long time and is slowly starting to build into something of a redirect hell on the Web. And it has a price.

The overhead that’s already here
There’s already plenty of redirect overhead in places where you don’t really think about it. For example:
Every time you click on a search result in Google or Bing there’s an intermediate step via Google’s servers (or Bing’s) before you’re redirected to the real target site.
Every time you click on a Feedburner RSS headline you’re also redirected before arriving at the real target.
Every time you click on an outgoing link in Facebook, there’s an in-between step via a Facebook server before you’re redirected to where you want to go.
And so on, and so on, and so on.

This is, of course, because Google, Facebook and other online companies like to keep track of clicks and how their users behave. Knowledge is a true resource for these companies. It can help them improve their service, it can help them monetize the service more efficiently, and in many cases the actual data itself is worth money. Ultimately this click tracking can also be good for end users, especially if it allows a service to improve its quality. But…

Things are getting out of hand
If it were just one extra intermediary step, that may have been alright, but if you look around, you’ll start to discover more and more layering of these redirects, different services taking a bite of the click data on the way to the real target. You know, the one the user actually wants to get to. It can quickly get out of hand.
We’ve seen scenarios where outgoing links in for example Facebook will first redirect you via a Facebook server, then a URL shortener (for example bit.ly), which in turn redirects to a longer URL that in turn will result in several additional redirects before you FINALLY reach the target. It’s not uncommon with three or more layers of redirects via different sites that, from the perspective of the user, are pure overhead. The problem is that that overhead isn’t free. It’ll add time to reaching your target, and it’ll add more links (literally!) in the chain that can either break or slow down. It can even make sites appear down when they aren’t, because something on the way broke down. And it looks like this practice is only getting more and more prevalent on the Web. A recent case study of the “redirect trend”: Twitter Do you remember that wave of URL shorteners that came when Twitter started to get popular? That’s where our story begins. Twitter first used the already established TinyURL.com as its default URL shortener. It was an ideal match for Twitter and its 140-character message limit. Then came Bit.ly and a host of other URL shorteners who also wanted to ride on the coattails of Twitter’s growing success. Bit.ly soon succeeded in replacing TinyURL as the default URL shortener for Twitter. As a result of that, Bit.ly got its hands on a wealth of data: a big share of all outgoing links on Twitter and how popular those links were, since they could track every single click. It was only a matter of time before Twitter wanted that data for itself. And why wouldn’t it? In doing so, it gains full control over the infrastructure it runs on and more information about what Twitter’s users like to click on, and so on. So, not long ago, Twitter created its own URL shortener, t.co. In Twitter’s case this makes perfect sense. 
That is all well and good, but now comes the really interesting part that is the most relevant for this article: Twitter will by the end of the year start to funnel ALL links through its URL shortener, even links already shortened by other services like Bit.ly or Google’s Goo.gl. By funneling all clicks through its own servers first, Twitter will gain intimate knowledge of how its service is used, and about its users. It gets full control over the quality of its service. This is a good thing for Twitter. But what happens when everyone wants a piece of the pie? Redirect after redirect after redirect before we arrive at our destination? Yes, that’s exactly what happens, and you’ll have to live with the overhead.

Here’s an example of what link sharing could look like once Twitter starts to funnel all clicks through its own service:
Someone shares a goo.gl link on Twitter, which automatically gets turned into a t.co link.
When someone clicks on the t.co link, they will first be directed to Twitter’s servers to resolve the t.co link to the goo.gl link and redirect it there.
The goo.gl link will send the user to Google’s servers to resolve the goo.gl link and then finally redirect the user to the real, intended target.
This target may then in turn redirect the user even further.
It makes your head spin, doesn’t it?

More redirect layers to come?
About a year ago we wrote an article about the potential drawbacks of URL shorteners, and it applies perfectly to this more general scenario with multiple redirects between sites. The performance, security and privacy implications of those redirects are the same. We strongly suspect that the path we currently see Twitter going down is a sign of things to come from many other web services out there who may not already be doing this. (I.e., sampling and logging the clicks before sending them on, not necessarily using URL shorteners.)
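The layering described in this article can be modelled as a simple lookup chain. The sketch below is only an illustration: the short links, the target URLs and the per-hop delay are all invented, and nothing here touches the network. It just counts how many extra stops a click makes before reaching the page the user wanted.

```python
# Hypothetical redirect table: each key redirects to its value.
REDIRECTS = {
    "http://t.co/abc": "http://goo.gl/xyz",
    "http://goo.gl/xyz": "http://example.com/article",
    "http://example.com/article": "http://www.example.com/article",
}


def resolve(url, table, max_hops=10):
    """Follow redirects until a URL has no entry in the table.

    Returns the final URL and the full chain of URLs visited.
    """
    chain = [url]
    while url in table:
        if len(chain) > max_hops:
            raise RuntimeError("too many redirects")
        url = table[url]
        if url in chain:
            raise RuntimeError("redirect loop")
        chain.append(url)
    return url, chain


if __name__ == "__main__":
    final, chain = resolve("http://t.co/abc", REDIRECTS)
    hops = len(chain) - 1
    assumed_delay_s = 0.2  # made-up cost of one extra round trip
    print(" -> ".join(chain))
    print(f"{hops} redirects, roughly {hops * assumed_delay_s:.1f} s of overhead")
```

Each entry in the table is also a separate point of failure: if any one of the intermediate services is slow or down, the final page looks slow or down too.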
And even when the main services don’t do this, more in-between, third-party services like various URL shorteners show up all the time. Just the other day, anti-virus maker McAfee announced the beta of McAf.ee, a “safe” URL shortener. It may be great, who knows, but in light of what we’ve told you in this article it’s difficult not to think: yet another layer of redirects. Is this really where the Web is headed? Do we want it to? Back to the games boys and girls

China Grabs Supercomputing Leadership Spot in Latest Ranking of World’s Top 500 Supercomputers

TOP 10 Systems - 11/2010
1 Tianhe-1A - NUDT TH MPP, X5670 2.93Ghz 6C, NVIDIA GPU, FT-1000 8C
2 Jaguar - Cray XT5-HE Opteron 6-core 2.6 GHz
3 Nebulae - Dawning TC3600 Blade, Intel X5650, NVidia Tesla C2050 GPU
4 TSUBAME 2.0 - HP ProLiant SL390s G7 Xeon 6C X5670, Nvidia GPU, Linux/Windows
5 Hopper - Cray XE6 12-core 2.1 GHz
6 Tera-100 - Bull bullx super-node S6010/S6030
7 Roadrunner - BladeCenter QS22/LS21 Cluster, PowerXCell 8i 3.2 Ghz / Opteron DC 1.8 GHz, Voltaire Infiniband
8 Kraken XT5 - Cray XT5-HE Opteron 6-core 2.6 GHz
9 JUGENE - Blue Gene/P Solution
10 Cielo - Cray XE6 8-core 2.4 GHz

Thu, 2010-11-11 22:42

MANNHEIM, Germany; BERKELEY, Calif.; and KNOXVILLE, Tenn. - The 36th edition of the closely watched TOP500 list of the world’s most powerful supercomputers confirms the rumored takeover of the top spot by the Chinese Tianhe-1A system at the National Supercomputer Center in Tianjin, achieving a performance level of 2.57 petaflop/s (quadrillions of calculations per second). News of the Chinese system’s performance emerged in late October.

As a result, the former number one system, the Cray XT5 “Jaguar” system at the U.S. Department of Energy’s (DOE) Oak Ridge Leadership Computing Facility in Tennessee, is now ranked in second place. Jaguar achieved 1.75 petaflop/s running Linpack, the TOP500 benchmark application.

Third place is now held by a Chinese system called Nebulae, which was also knocked down one spot from the June 2010 TOP500 list with the appearance of Tianhe-1A. Located at the National Supercomputing Centre in Shenzhen, Nebulae performed at 1.27 petaflop/s. Tsubame 2.0 at the Tokyo Institute of Technology is number four, having achieved a performance of 1.19 petaflop/s. Tsubame is the only Japanese machine in the TOP10. At number five is Hopper, a Cray XE6 system at DOE’s National Energy Research Scientific Computing (NERSC) Center in California.
Hopper just broke the petaflop/s barrier with 1.05 petaflop/s, making it the second most powerful system in the U.S. and only the third U.S. machine to achieve petaflop/s performance. Of the Top 10 systems, seven achieved performance at or above 1 petaflop/s. Five of the systems in the Top 10 are new to the list. Of the Top 10, five are in the United States and the others are in China, Japan, France, and Germany. The most powerful system in Europe is a Bull system at the French CEA (Commissariat à l'énergie atomique et aux énergies alternatives or Atomic and Alternative Energies Commission), ranked at number six. The full TOP500 list and accompanying analysis will be discussed at a special Nov. 17 session at the SC10 Conference on High Performance Computing, Networking, Storage and Analysis being held Nov. 13-19 in New Orleans, La.

Accelerating Performance
The two Chinese systems and Tsubame 2.0 are all using NVIDIA GPUs (graphics processing units) to accelerate computation. In all, 17 systems on the TOP500 use GPUs as accelerators, with 6 using the Cell processor, ten of them using NVIDIA chips and one using ATI Radeon chips. China is also accelerating its move into high performance computing and now has 42 systems on the TOP500 list, moving past Japan, France, Germany and the UK to become the number two country behind the U.S.

Geographical Shifts
Although the U.S. remains the leading consumer of HPC systems with 275 of the 500 systems, this number is down from 282 in June 2010. The European share (124 systems, down from 144) is still substantially larger than the Asian share (84 systems, up from 57). Dominant countries in Asia are China with 42 systems (up from 24), Japan with 26 systems (up from 18), and India with four systems (down from five). In Europe, Germany and France caught up with the UK, which lost its place as the No. 1 European nation, dropping from 38 systems six months ago to 24 on the newest list.
Germany and France passed the UK and now have 26 and 25 systems respectively, although France is down from 29 while Germany is up from 24 six months ago.

Other Highlights from the Latest List

· Cray Inc., the U.S. firm which was long synonymous with supercomputing, has regained the number two spot in terms of market share measured in performance, moving ahead of HP, but still trailing IBM. Cray’s XT and XE systems remain very popular with big research customers, and four of these systems are in the Top 10.
· HP is still ahead of Cray measured in the number of systems, and both are trailing IBM.
· Intel dominates the high-end processor market, with 79.6 percent (398) of all systems using Intel processors, although this is slightly down from six months ago (406 systems, 81.2 percent).
· Intel is now followed by the AMD Opteron family with 57 systems (11.4 percent), up from 47. The share of IBM Power processors is slowly declining and now stands at 40 systems (8.0 percent), down from 42.
· Quad-core processors are used in 73 percent (365) of the systems, while 19 percent (95 systems) are already using processors with six or more cores.

In Just Six Months

· The entry level to the list moved up to 31.1 teraflop/s (trillions of calculations per second) on the Linpack benchmark, compared to 24.7 Tflop/s six months ago.
· The last-ranked system on the newest list was listed at position 305 in the previous TOP500 just six months ago. This turnover rate is about average after the rather low replacement rate six months ago.
· Total combined performance of all 500 systems has grown to 44.2 Pflop/s, compared to 32.4 Pflop/s six months ago and 27.6 Pflop/s one year ago.

Some Final Notes on Power Consumption

Just as the TOP500 List has emerged as a standardized indicator of performance and architecture trends since it was created 18 years ago, the list now tracks actual power consumption of supercomputers in a consistent fashion.
Although power consumption is increasing, the computing efficiency of the systems is also improving. Here are some power consumption notes from the newest list.

· Only 25 systems on the list are confirmed to use more than 1 megawatt (MW) of power.
· IBM’s prototype of the new BlueGene/Q system set a new record in power efficiency with a value of 1,680 Mflops/watt, more than twice that of the next best system.
· Average power consumption of a TOP500 system is 447 kilowatts (kW) and average power efficiency is 195 Mflops/watt (up from 150 Mflops/watt one year ago).
· Average power consumption of a TOP10 system is slowly rising and now stands at 3.2 MW (up from 2.89 MW six months ago), while average power efficiency is 268 Mflops/watt, down from 300 Mflops/watt six months ago.
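
The shares, growth rates and efficiency figures quoted in this report come down to simple arithmetic, and a short Python sketch can make the relationships explicit. This is illustrative only: the helper names (`share_percent`, `growth_percent`, `mflops_per_watt`) are invented for this example and are not part of any TOP500 tooling, and the system used in the power-efficiency call is hypothetical, not an entry from the list.

```python
TOTAL_SYSTEMS = 500  # the list always ranks 500 systems


def share_percent(count, total=TOTAL_SYSTEMS):
    """Share of the list held by `count` systems, in percent (one decimal)."""
    return round(100.0 * count / total, 1)


def growth_percent(new, old):
    """Relative change from `old` to `new`, in percent (one decimal)."""
    return round(100.0 * (new - old) / old, 1)


def mflops_per_watt(tflops, kilowatts):
    """Power efficiency: Linpack Mflop/s delivered per watt consumed.

    1 Tflop/s = 1e6 Mflop/s and 1 kW = 1e3 W.
    """
    return (tflops * 1e6) / (kilowatts * 1e3)


# Processor shares quoted in the report (system counts out of 500).
intel_share = share_percent(398)   # Intel systems
amd_share = share_percent(57)      # AMD Opteron systems

# Combined performance of all 500 systems, in Pflop/s.
six_month_growth = growth_percent(44.2, 32.4)
one_year_growth = growth_percent(44.2, 27.6)

# Hypothetical system (not from the list): 1,000 Tflop/s drawing 4,000 kW.
example_efficiency = mflops_per_watt(1000, 4000)

print(intel_share, amd_share, six_month_growth, one_year_growth, example_efficiency)
```

Run against the numbers above, the share helper reproduces the 79.6 and 11.4 percent quoted for Intel and AMD, and the growth helper shows combined performance rising by roughly 36 percent in six months and about 60 percent in a year.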